Importance of Multi-Cloud with AI and GPUs

Kenji Kaneda - Chief Architect, CloudNatix

The importance of multi-cloud strategies is skyrocketing with the rise of AI and GPUs. The scarcity and high cost of GPUs make them one of the most critical resources for AI companies. To succeed, companies must optimize their GPU supply chains effectively.

Why Multi-Cloud for GPUs?

Relying on a single GPU provider is risky and limits flexibility. Given the ongoing GPU shortage, there is no guarantee that you can always secure the GPUs needed for your training and inference workloads. Unless you pay extra to reserve GPUs, which is often difficult to plan in advance, you risk delays and inefficiencies.

How can these challenges be addressed? The answer is to adopt a multi-cloud approach. By leveraging multiple cloud providers, companies can:

Access GPUs from major providers like AWS, Google Cloud, and Azure.

Utilize specialized GPU providers such as CoreWeave, RunPod, and Lambda.

Explore alternative accelerators like AMD GPUs, AWS Inferentia, and TPUs.

With so many options available, multi-cloud strategies enable companies to build robust and cost-effective AI infrastructure.

Take Cohere as an example. Their journey exemplifies how multi-cloud can empower AI innovation. You can watch their presentation "From Supercomputing to Serving: A Case Study Delivering Cloud Native Foundation Models" from CloudNative and AI Day for more insights (link). See also a keynote from CERN and Google that demonstrates a use case of multi-cluster job dispatching on a public cloud and on-premise (link).

A New Multi-Cloud Strategy

Multi-cloud has been discussed since the early days of the Cloud Native movement. Yet, most implementations fall short of true integration. Typically, even when companies operate across multiple clouds, these environments are managed independently (often as a result of acquisitions or organizational silos). Moving workloads between providers rarely justifies the implementation and operational costs for workloads that use CPUs, which are abundant and rarely face scarcity issues.

With GPUs, however, the narrative is shifting. AI workloads demand a dynamic, federated approach to multi-cloud. Imagine training and inference jobs seamlessly running wherever GPUs are cheapest or most available. For startups, this could mean leveraging platforms offering credits or incentives. A true multi-cloud GPU federation platform would make these possibilities a reality.

Challenges for Platform Teams

While multi-cloud strategies offer immense value to companies and empower data scientists, they present significant challenges for platform teams. Key challenges include:

Efficient GPU utilization: GPU federation is one critical approach to maximizing GPU utilization. Federating GPUs across multiple clouds, however, requires advanced scheduling and orchestration capabilities to ensure training and inference workloads run efficiently and cost-effectively.

Operational complexity: While Kubernetes provides a unified API, its operation mechanisms vary across providers like EKS, AKS, and GKE. Differences in add-on management, upgrade processes, and operational tools create fragmentation. No single dashboard effectively manages Kubernetes clusters across multiple providers, forcing DevOps teams to navigate different systems.

CloudNatix: A Multi-Cloud Platform for AI and GPUs

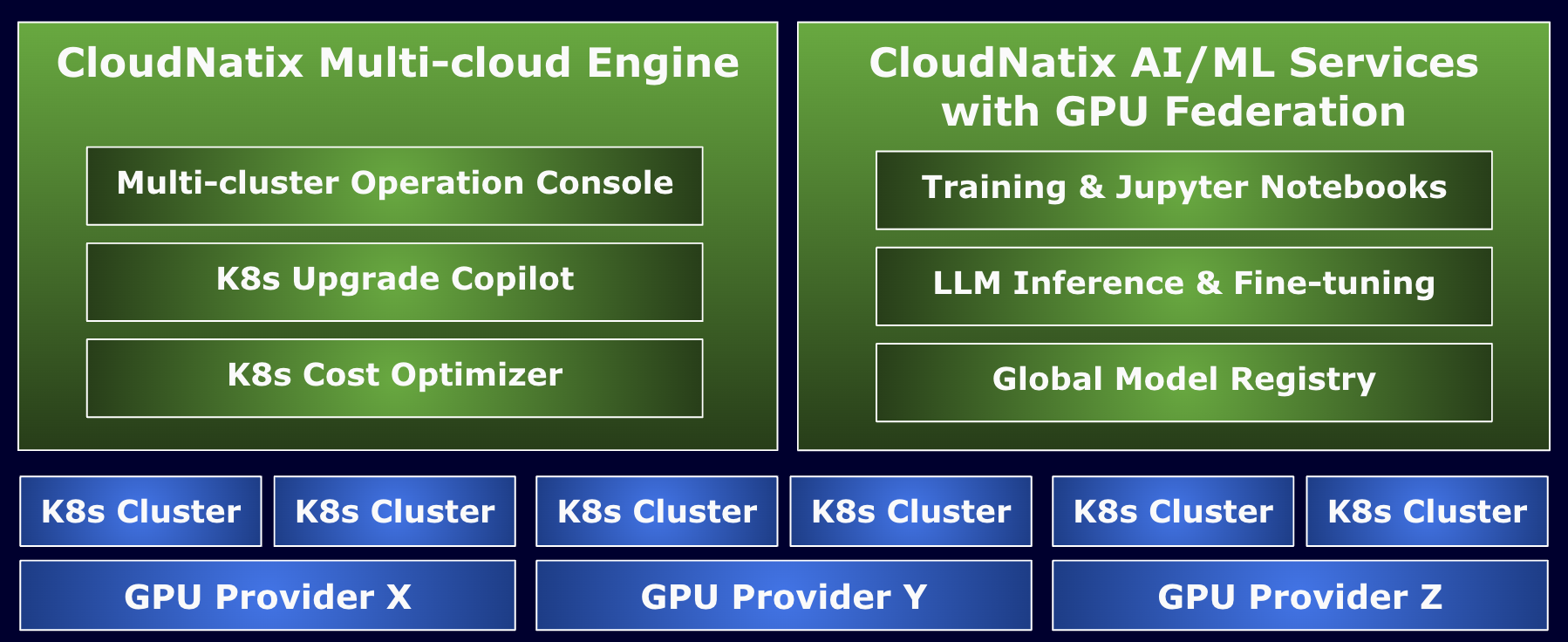

Since its inception in 2020, CloudNatix has been a leader in multi-cloud platforms. Last year, we expanded our capabilities to address the unique needs of AI and GPUs, delivering a true multi-cloud solution for the AI infrastructure.

Key features of CloudNatix include:

Unified platform: Build and manage a cohesive platform across multiple cloud providers, ensuring Kubernetes clusters are managed efficiently and at low operating costs.

Dynamic GPU federation: Automatically optimize GPU utilization for training and inference workloads based on cost, availability, and performance metrics.

Seamless installation: The installation is straightforward and avoids changes to underlying network and security configurations. For instance, there is no need to open incoming ports or expose the Kubernetes API server to the public internet. CloudNatix seamlessly integrates with Kubernetes clusters within private networks.

By simplifying multi-cloud operations, CloudNatix empowers platform teams to focus on innovation rather than grappling with complexity.

Conclusion

The era of multi-cloud for AI and GPUs is here. As companies strive to optimize their AI infrastructure, multi-cloud strategies are no longer optional, but they are essential. With solutions like CloudNatix, organizations can overcome the challenges of multi-cloud and unlock the full potential of their AI and GPU investments.

Are you ready to embrace the future of multi-cloud AI? Learn more about how CloudNatix can help your team succeed.