Seamless AI Infrastructure: Day 2 Management and AI Development with CloudNatix

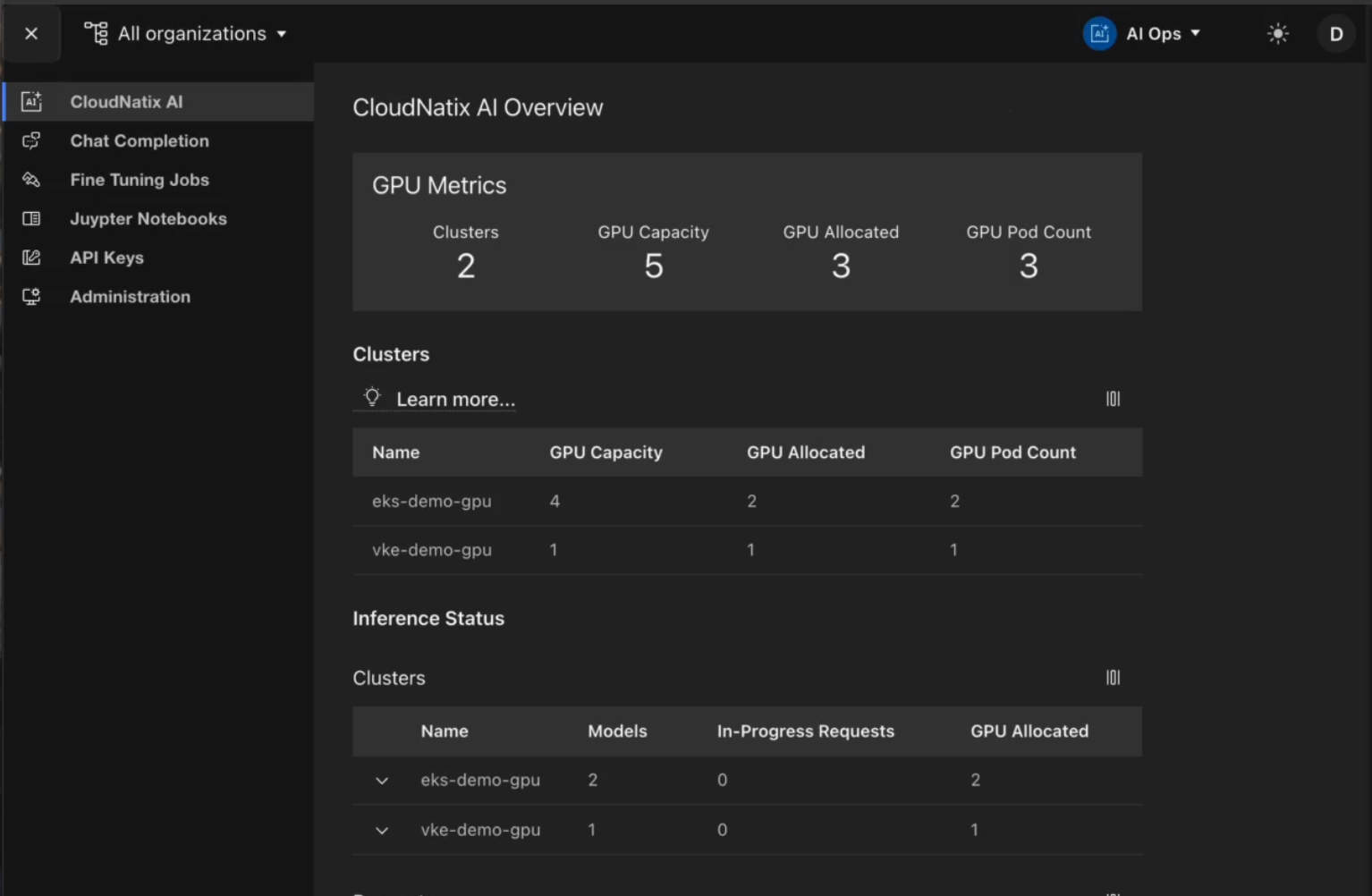

CloudNatix’s AI overview

By John McClary (john@cloudnatix.com), 03/16/2025

Building and managing LLM infrastructure is complex, especially across multiple cloud providers. CloudNatix simplifies the process with a unified platform for AI development, deployment, and management that is designed to keep cost and performance under control.

A Complete AI Stack in One Platform

With CloudNatix AI, you get:

✅ Multi-cloud support—Host GPUs across AWS, Vultr, and more, seamlessly pooled into a federated system.

✅ Infrastructure control—Manage Kubernetes clusters, monitor workloads, and operate directly on pods.

✅ AI Ops tools—Fine-tune models, test them in Jupyter Notebooks, and interact via a built-in chat interface.

✅ Application integration—Connect hosted models to tools like Open WebUI and automation platforms like n8n.

Watch the Demo

In our latest demo, we show how CloudNatix streamlines LLM hosting and operations, from setting up infrastructure to deploying and fine-tuning AI models. Whether you’re training custom models or running inference workloads, CloudNatix provides an end-to-end solution that minimizes complexity while maximizing efficiency.

🚀 Watch the demo now and see how CloudNatix can power your AI workflows.

For any inquiries, please contact:

Email: contact@cloudnatix.com

Website: https://www.cloudnatix.com/

Follow us on LinkedIn: https://www.linkedin.com/company/cloudnatix-inc